Understanding and Translating Chatbots

Chatbots are created for a purpose. Whether that purpose is to entertain the user or to carry out tasks, a chatbot interacts with the user. To build one, simple hard-coded rules can be written or NLP models can be used. In either case, data is the key to success.

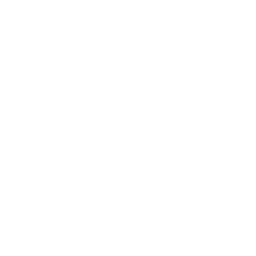

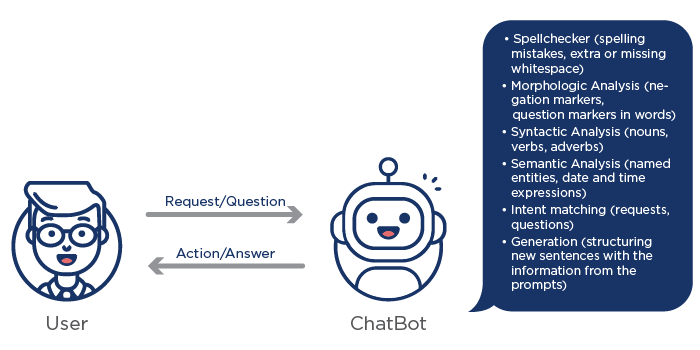

A simple pipeline of an interaction with a chatbot is as follows:

Rule-Based Chatbots

These chatbots have a simpler design, and the rule of thumb is to have as many unambiguous examples as possible. Put simply, they work on if-conditions, looking for an exact match in order to take an action or to respond to a question.

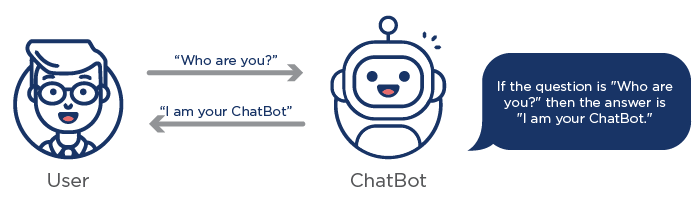

To give an example, if the chatbot is designed to respond to the question, "Who are you?" with the answer, "I am your chatbot," then the pipeline would look like this:

This simple rule can be made more sophisticated by adding “ANDs” or “ORs” to increase coverage. If it is the only hard-coded rule, the chatbot won't be able to respond to, "Tell me who you are."

This kind of question-answer or request-action structure is designed to understand the user's intent. Many more questions can be predefined for the "introduce yourself" intent, and if the user asks one of them, the exact match triggers the corresponding answer.
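The exact-match behavior described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the names `INTENT_PROMPTS`, `ANSWERS`, `normalize`, and `respond` are hypothetical, and listing several prompts per intent plays the role of the "ORs" mentioned earlier.

```python
import re

# Hypothetical intent table: any one of these prompts (an "OR") triggers the intent.
INTENT_PROMPTS = {
    "introduce_yourself": [
        "Who are you?",
        "Tell me who you are.",
        "Introduce yourself.",
    ],
}
ANSWERS = {"introduce_yourself": "I am your chatbot."}
FALLBACK = "Sorry, I didn't understand."


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so trivial variations still match exactly."""
    return re.sub(r"[^a-z0-9 ]", "", text.lower()).strip()


# Map every normalized prompt to its intent for a constant-time exact lookup.
LOOKUP = {
    normalize(prompt): intent
    for intent, prompts in INTENT_PROMPTS.items()
    for prompt in prompts
}


def respond(prompt: str) -> str:
    """Return the answer for an exactly matching prompt, or the fallback."""
    intent = LOOKUP.get(normalize(prompt))
    return ANSWERS[intent] if intent else FALLBACK
```

With this table, `respond("tell me who you are")` returns the predefined answer, while any prompt that is not listed, such as `respond("What is your name?")`, falls through to the fallback.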

Importance of Data in Rule-Based Chatbots

As this kind of chatbot relies on predefined prompts, market research on the necessary intents and collection of possible prompts are of the utmost importance. For each prompt that fails to trigger an action/answer, the chatbot can rely on a fallback answer such as, "Sorry, I didn't understand." However, this is a sign of a lack of data in the prompts repository. For a chatbot relying on predefined rules to be successful, the following are needed:

- A list of intents must be gathered and decided on depending on the market:

  - Is your chatbot working for a specific purpose such as making money transfers or answering questions about the weather?

  - What possible intentions can your users have while using the chatbot?

- A list of possible requests/questions that your users can use must be collected:

  - What are the possible ways of requesting the same action or asking for an answer?

  - Are there synonyms in the same sentence that can be replaced easily?

- A test set must be created:

  - What requests/questions should trigger a specific intent?

  - What requests/questions should NOT trigger a specific intent?

These steps prove the importance of data in the creation and testing of the chatbot. The quality of the chatbot can be observed by checking the numbers for the following cases:

- the prompt X triggered the intent X correctly (true positives)

- the prompt X didn't trigger the intent X while it should have (false negatives)

- the prompt Y triggered the intent X while it shouldn't have (false positives)

- the prompt Y didn't trigger the intent X as expected (true negatives)
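The four cases above can be counted from a test set and turned into summary metrics. The sketch below assumes a hypothetical test-result format of `(expected_intent, triggered_intent)` pairs, where `None` means that no intent applies or fired. Recall is included alongside precision and accuracy as a standard companion metric; it is not named in the text.

```python
from collections import Counter


def confusion_counts(results, intent):
    """Count TP/FN/FP/TN for one intent.

    results: iterable of (expected_intent, triggered_intent) pairs (assumed format).
    """
    counts = Counter()
    for expected, triggered in results:
        if expected == intent and triggered == intent:
            counts["tp"] += 1  # prompt X triggered intent X
        elif expected == intent:
            counts["fn"] += 1  # prompt X failed to trigger intent X
        elif triggered == intent:
            counts["fp"] += 1  # prompt Y wrongly triggered intent X
        else:
            counts["tn"] += 1  # prompt Y correctly did not trigger intent X
    return counts


def precision_recall_accuracy(counts):
    """Derive the usual metrics from the four counts, guarding against empty sets."""
    tp, fn, fp, tn = (counts[k] for k in ("tp", "fn", "fp", "tn"))
    total = tp + fn + fp + tn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / total if total else 0.0
    return precision, recall, accuracy


# Made-up test results, for illustration only.
results = [
    ("weather", "weather"),
    ("weather", None),
    ("greet", "weather"),
    ("greet", "greet"),
    ("weather", "weather"),
]
counts = confusion_counts(results, "weather")
precision, recall, accuracy = precision_recall_accuracy(counts)
```

For the made-up results above, the "weather" intent has two true positives and one each of the other cases, so precision and recall are both 2/3 and accuracy is 3/5.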

However, the chatbot is still limited to what is already predefined. To overcome this problem, the focus should be directed to AI-powered chatbots.

AI-Powered Chatbots

Compared to rule-based chatbots, AI-powered chatbots are more complex and provide broader coverage. The intents are populated from high-volume training data sets. Depending on the needs, the internal structure of the chatbot can look as follows:

The steps that make your chatbot smart don't have to be limited to those mentioned and, depending on the needs, don't have to include all of them. In this method, the intents, possible prompts, and even the answers can all be induced from big training data sets with the help of natural language processing (NLP) methods. As a result, the user can use synonyms and different prompts for the same action/answer without needing to memorize the triggering phrase.

To achieve this, NLP applications must be used after collecting natural language data from speakers. For example, for an intent that responds with the current weather in a given location, the data should include many possible ways to ask about the weather within the limits of the intent: "current" and "location." Some examples can be:

- How's the weather now?

- Tell me about the weather.

- Is it raining now?

At the same time, sentences that should NOT trigger the intent should also be collected:

- How's the weather in Tokyo?

- Is it raining tomorrow?
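To show how positive and negative examples can both shape a decision, here is a deliberately simple sketch that compares a new prompt to the collected sentences by word overlap (Jaccard similarity). It is a toy stand-in for a trained NLP model, not the method a real AI-powered chatbot would use; the function names and the `threshold` value are assumptions made for illustration.

```python
import re

# Example sentences taken from the lists above.
POSITIVE = ["How's the weather now?", "Tell me about the weather.", "Is it raining now?"]
NEGATIVE = ["How's the weather in Tokyo?", "Is it raining tomorrow?"]


def tokens(text):
    """Split a sentence into a set of lowercase words (apostrophes kept)."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Share of words two sets have in common, between 0.0 and 1.0."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_score(prompt, examples):
    """Similarity between the prompt and its closest example."""
    prompt_tokens = tokens(prompt)
    return max(jaccard(prompt_tokens, tokens(example)) for example in examples)


def triggers_current_weather(prompt, threshold=0.4):
    """Trigger only if the prompt is close enough to a positive example
    and strictly closer to it than to any negative example."""
    positive = best_score(prompt, POSITIVE)
    negative = best_score(prompt, NEGATIVE)
    return positive >= threshold and positive > negative
```

Under these assumptions, "What's the weather now?" triggers the intent even though it was never listed, while "How's the weather in Paris?" does not, because it sits closer to the negative example about Tokyo. A word-overlap measure like this breaks down quickly on real data, which is why large, high-quality training sets and proper models matter.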

Importance of Data in AI-Powered Chatbots

As the rules and intents will be induced from real user data, the amount and quality of that data are of great importance. What should and should not be triggered will be decided by induction from the examples collected from real users. Quality spot-checks are essential while gathering the data. Again, as with rule-based chatbots, accuracy and precision metrics can be used.

With the help of other NLP methods and natural language data, a chatbot can be taken a step further: conversation. For a chatbot to hold a conversation, it has to understand context-dependent cues, such as pronouns. The following example demonstrates a conversation between the user and the chatbot in which the interpretation of "there" depends on the user's previous prompt:

- User: Where is the Eiffel Tower?

- Chatbot: The address is Champ de Mars, 5 Avenue Anatole France, 75007 Paris, France.

- User: How can I go there?

- Chatbot: Please follow the directions.
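One way to picture the context tracking in this exchange is a small conversation state that remembers the last place mentioned and substitutes it for "there". The sketch below is hypothetical throughout: the `KNOWN_PLACES` table and the `Conversation` class are invented for illustration, and real systems rely on far more robust reference-resolution methods.

```python
import re

# Hypothetical list of places the chatbot knows, keyed by lowercase name.
KNOWN_PLACES = {"eiffel tower": "the Eiffel Tower"}


class Conversation:
    """Keeps the minimal context needed to interpret "there"."""

    def __init__(self):
        self.last_place = None  # display name of the most recently mentioned place

    def resolve(self, prompt: str) -> str:
        """Remember any place in the prompt, then rewrite "there" using context."""
        lowered = prompt.lower()
        for key, display_name in KNOWN_PLACES.items():
            if key in lowered:
                self.last_place = display_name
        if self.last_place is None:
            return prompt  # nothing to resolve against
        return re.sub(
            r"\bthere\b", "to " + self.last_place, prompt, flags=re.IGNORECASE
        )


conversation = Conversation()
first = conversation.resolve("Where is the Eiffel Tower?")
second = conversation.resolve("How can I go there?")
```

Here `first` is returned unchanged, while `second` becomes "How can I go to the Eiffel Tower?", which the rest of the pipeline can handle like any self-contained prompt.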

One Step Closer to a Virtual Assistant

What makes a chatbot closer to a virtual assistant is voice interaction and persona.

Hands-free modes were a groundbreaking change in how devices and software are used. The freedom to carry out tasks or retrieve information without typing attracted more users. To remove the chore of typing the prompt and replace it with voice interaction, two further NLP methods must be used: automatic speech recognition (ASR) and text-to-speech (TTS).

ASR transcribes the spoken prompt, either onto a visible screen or into a hidden layer, and the transcript is then sent to the chatbot's pipeline. To improve the accuracy of the ASR, the other NLP methods mentioned previously can be used. Once the chatbot receives the prompt, the steps explained above are carried out, and the output is generated, again on a visible screen or in a hidden layer. The output is then read out loud to the user by means of TTS technologies.

Voice interaction, especially the TTS phase, reveals the importance of a persona in a virtual assistant. Hearing an answer read out in a robotic tone can make users feel that they are interacting with a computer program rather than with an assistant. Even though users know that a virtual assistant is actually a computer program, hearing a voice with a persona makes the experience more realistic. Some virtual assistants already offer different voices and personas so that users can choose the one they feel most comfortable with.

A persona is highly dependent on the local culture of the user. Some cultures may prefer more direct answers, while others may prefer more subtle ones. That is to say, the persona is not only the physical tone of the voice; it also requires market research into the linguistic cues of the culture. To obtain this information, natural language data must be collected from native speakers who can answer some basic questions. For example:

- How would you want the assistant to answer the prompt, "What is the weather like?"

- How would you grade the answer, "The weather is just perfect to go to a beach!"

- How would you grade the answer, "It is 30°C and sunny."

With the collected data, different personas can be developed for the same culture, and the choice will be left to the user.

How to Teach a Virtual Assistant a New Language

Enabling a chatbot or a virtual assistant to interact in a new language is relatively straightforward once it has been built for one language. The main steps are:

- Intent market research: Not every intent that exists for one language is necessary for another. At the same time, new intents may be needed for the new language. For example, a prompt asking for the score in a sport that is not played in the local region may be deprioritized.

- Localization of existing possible prompts: The existing prompts should be localized into the new language with the utmost attention to natural usage. Ultimately, the purpose is to catch as many prompts from real users as possible.

- Editing the possible prompts list: Some prompts may not be used in the new language, and others may be missing from the data set. The list should be edited and updated by collecting natural language data from native speakers.

- Editing the existing answers: The answers the chatbot or the virtual assistant gives once triggered should be localized into the new language. If necessary, some answers should be removed, and others that are more appropriate to the language and the culture should be added. Again, a data set collected from native speakers is the most reliable basis for success.

- Quality checkpoints and analysis: While collecting data, part of it should be held out for use as a test set. High quality in a chatbot or a virtual assistant can be achieved by reviewing accuracy and precision values and updating the training sets accordingly.
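Holding out part of the collected data, as the last step describes, can be as simple as a shuffled split. The sketch below is one possible approach under assumed defaults: the function name `split_train_test`, the 20% test fraction, and the fixed seed are all illustrative choices, not recommendations from the text.

```python
import random


def split_train_test(examples, test_fraction=0.2, seed=0):
    """Shuffle the collected prompts and hold out a fraction as a test set.

    A fixed seed keeps the split reproducible between runs.
    Returns (training_examples, test_examples).
    """
    shuffled = list(examples)  # copy so the caller's list is left untouched
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Placeholder prompts standing in for localized data from native speakers.
collected = [f"prompt {i}" for i in range(10)]
train, test = split_train_test(collected)
```

With ten placeholder prompts and the assumed 20% fraction, two prompts end up in the test set and eight in the training set, with no overlap between them. The held-out prompts are then used only for measuring accuracy and precision, never for training.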

By DataForce